September 29, 2025

A Practical Architecture for Intelligence at the Edge

Type: Deep Dives

Contributors: Murat Kilicoglu

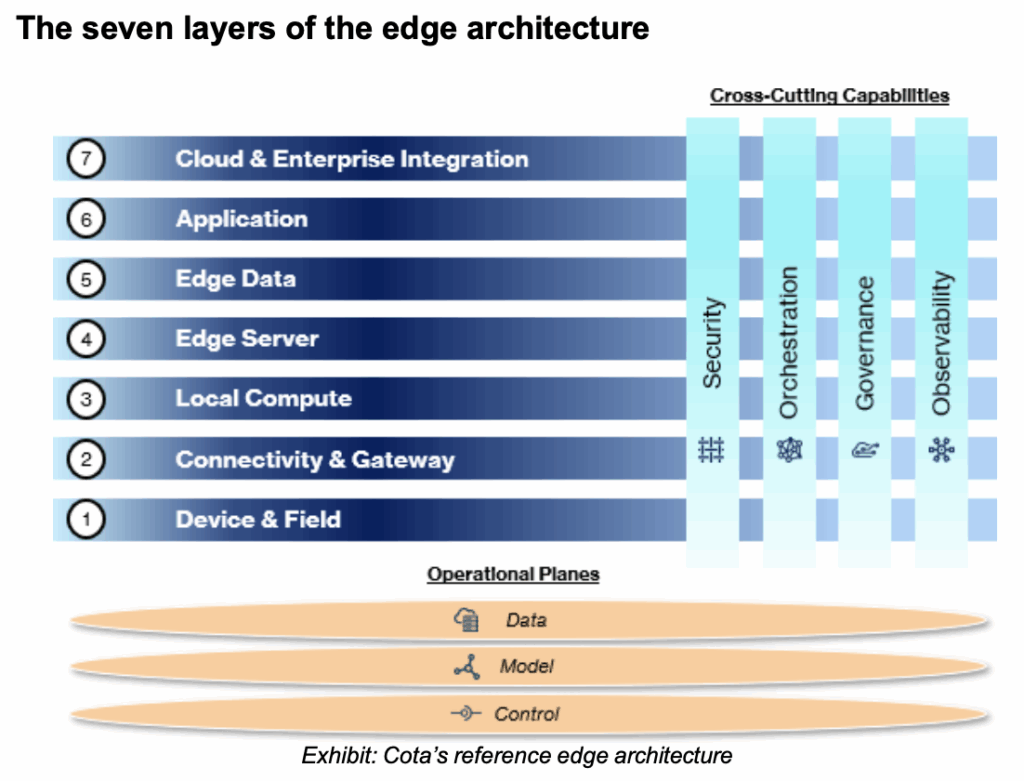

Walk into a warehouse, a hospital operating room, or a quick‑service restaurant, and you will notice a shared challenge: useful decisions often need to happen where the data is born, not thousands of miles away. At Cota, we describe this as intelligence at the edge: the practical interplay of sensing, local inference, and action, with the cloud in the loop for heavier inference, fleet operations, and observability.

Below is a practical edge architecture that we believe is durable across a range of real‑world projects. In our experience, specific workloads, vendors, and budgets vary, but the interfaces, processes, and economic value expected from solutions are similar. Our working map of this system is based on what we have seen across companies and sectors, and it aims to be useful without assuming there is only one right way.

An overview of the architecture

- Device & Field Layer: The physical interface to the world: cameras, sensors, satellites, robots, wearables. Each speaks its own dialect and fails in its own ways, often in harsh conditions. The choices here set reliability and openness for everything above; if signals are trapped behind fragile drivers or proprietary protocols, scale suffers. The mix shifts by site: retail leans on cameras and POS links, healthcare adds imaging probes and bedside monitors, manufacturing brings PLCs and SCADA, and agriculture blends soil sensors, drones, and weather stations.

- Connectivity & Gateway Layer: Gateways turn field protocols (Modbus, OPC UA, BLE) into IP messaging (often MQTT), enforce local policies like rate limiting and data minimization, and buffer when links drop. They typically maintain a cloud-visible “device twin” so operations can see the state and push configuration. Standardizing here avoids one-off integrations and keeps sites operating through network incidents. In logistics and retail, gateway duties can live on existing network devices; in industrial settings, they are typically ruggedized boxes segmented from control networks.

- Local Compute Layer: Small single-board computers on or near the edge devices handle preprocessing, simple rules, and compact models. By filtering raw streams and enforcing guardrails locally, they cut bandwidth and help meet sub-100 ms decision targets or privacy-sensitive needs like on-device face blurring. Placement varies, from smart cameras to on-robot processors to wall-mounted boxes, but the role stays the same: shrink data early, run inference quickly, and keep loops close to the work.

- Edge Server Layer: Often rugged, standalone edge servers with GPUs/CPUs host larger models (multi-stream vision, audio, multimodal LLMs) and shared services such as model servers and local APIs. Increasingly, they also run RAG with a local vector store of site documents or product catalogs. This is often where response time targets, cloud cost control, and data residency requirements align. Teams typically orchestrate apps as containers (K3s/KubeEdge/Nomad) and allocate resources per model or stream; depending on workload, a single GPU can serve dozens of filtered camera feeds.

- Edge Data Layer: Within a site, an event bus moves messages, short-term stores keep time series and object data, vector databases support onsite RAG, and a small set of schemas and metadata make signals interpretable across locations. With a consistent data layer, new models and services slot in without replumbing every endpoint. Connected sites selectively sync into a data warehouse.

- Application Layer: This is where the business logic lives: loss prevention, walk-out checkout, predictive maintenance, line balancing, patient triage, and energy optimization, among others. Applications blend inference with policy rules (for example, escalating to a person below a certain confidence threshold) and simple UIs or alerts. Reusable components such as detectors, trackers, OCR, and prompts let teams bring the second and third use cases to the same footprint quickly, while UI surfaces and workflows adapt to local roles and compliance.

- Cloud & Enterprise Integration Layer: Think of this as the connection to the “mothership” that keeps every site in step with the business. It regularly syncs with core systems such as ERP, EAM, APM, ticketing, identity, and a company’s data lake/warehouse, so parts, work orders, users, and records stay consistent. It also provides a safe path to push trusted software and model updates and to pull back the signals that matter – health, metrics, and selected data for fleet-wide learning and oversight. With this backbone, sites can run locally when needed yet share improvements and remain auditable. The exact shape varies; some enterprises favor private clouds, others public, but the aim is the same: a dependable way to update, observe, and integrate the edge with the core enterprise so the extended enterprise can function effectively.
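To make the gateway's "buffer when links drop" duty concrete, here is a minimal store-and-forward sketch. It is illustrative only: the class name `StoreAndForwardBuffer`, the `publish` callback, and the `max_messages` limit are assumptions made for this example, not part of any specific gateway product. In practice, `publish` would wrap whatever messaging client the site uses (often MQTT).

```python
from collections import deque
from typing import Callable, Deque, Tuple

Message = Tuple[str, bytes]  # (topic, payload)


class StoreAndForwardBuffer:
    """Bounded queue that holds messages while the uplink is down.

    `publish` is a hypothetical callback that returns True when the
    message was accepted upstream and False when the link is unavailable.
    """

    def __init__(self, publish: Callable[[str, bytes], bool],
                 max_messages: int = 1000) -> None:
        self._publish = publish
        # With maxlen set, appending to a full deque drops the oldest entry,
        # which caps storage use during long outages.
        self._pending: Deque[Message] = deque(maxlen=max_messages)

    def send(self, topic: str, payload: bytes) -> None:
        """Queue a message, then try to drain the queue in order."""
        self._pending.append((topic, payload))
        self.flush()

    def flush(self) -> int:
        """Publish queued messages oldest-first; stop at the first failure."""
        sent = 0
        while self._pending:
            topic, payload = self._pending[0]
            if not self._publish(topic, payload):
                break  # link is still down; keep the message for later
            self._pending.popleft()
            sent += 1
        return sent

    def __len__(self) -> int:
        return len(self._pending)
```

A gateway loop would call `send` for each outgoing reading and call `flush` again whenever connectivity returns. Dropping the oldest messages first is one possible policy; sites that must not lose data would more likely spill to disk instead.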

Across these layers, several cross-cutting capabilities run continuously: security (network, data, and access to industrial controls); observability (device health, inference latency, and model performance); packaging and orchestration (lightweight Kubernetes or equivalents with over-the-air updates); and governance (data minimization, retention windows, human review for sensitive actions, and audit logging). These functions enable consistent performance and auditability, allowing multiple sites to be operated as a single fleet and ensuring compliance with company and industry policies.
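The policy rule mentioned in the application layer (escalating to a person below a confidence threshold) and the governance need for audit logging can be sketched together in a few lines. This is a simplified illustration: the `Detection` type, the `decide` function, the 0.8 default threshold, and the log record fields are all hypothetical choices for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Detection:
    label: str         # what the model believes it saw (hypothetical field)
    confidence: float  # model score in the range [0, 1]


def decide(detection: Detection,
           min_confidence: float = 0.8,
           audit_log: Optional[List[Dict[str, object]]] = None) -> str:
    """Return "auto" to act on the detection or "human_review" to escalate.

    The threshold value is an assumption; real deployments would tune it
    per use case and per site.
    """
    action = "auto" if detection.confidence >= min_confidence else "human_review"
    if audit_log is not None:
        # Record every decision, not only escalations, so the outcome
        # can be reconstructed later.
        audit_log.append({
            "label": detection.label,
            "confidence": detection.confidence,
            "threshold": min_confidence,
            "action": action,
        })
    return action
```

Keeping the rule separate from the model makes it easy to change the threshold through remote configuration without retraining or redeploying the model itself.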

Before talking about deployment patterns, it helps to introduce a lens we see show up across vendors and teams: three “planes” that separate core activities. They are common because they mirror how operations are actually owned and measured. The data plane moves bits (signals in, summaries/insights out); the model plane evolves AI models and their artifacts; and the control plane governs fleet behavior (policy, feature flags, remote config, and attestation).

These planes appear differently depending on where you run. In air‑gapped deployments (defense, maritime, mining, certain clinical settings), nearly everything runs locally. The payoff is sovereignty and reliability; the trade‑off is more capex and slower cross‑site learning. With cloud‑in‑the‑loop, common in retail, smart buildings, and logistics depots, sites operate autonomously but sync models, metrics, and selected data upstream, so centralized training and governance can lift the fleet while latency‑sensitive loops stay local. Many systems use split or hierarchical inference – device or gateway compute filters frames and runs compact models, the edge server hosts heavier models and shared services, and the cloud trains, registers, and orchestrates.
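The split or hierarchical inference pattern described above can be sketched as a small cascade. This is one possible arrangement under stated assumptions: the function name `cascade`, the three callables, and the rule of handing off to the heavier model when the compact model's confidence is low are illustrative, not a prescribed design.

```python
from typing import Callable, Optional, Tuple

Frame = bytes
Prediction = Tuple[str, float]  # (label, confidence)


def cascade(frame: Frame,
            is_interesting: Callable[[Frame], bool],
            compact_model: Callable[[Frame], Prediction],
            heavy_model: Callable[[Frame], Prediction],
            handoff_below: float = 0.7) -> Optional[Tuple[str, Prediction]]:
    """Route one frame through a device-then-edge-server cascade.

    Returns None when the frame is filtered out, otherwise a pair of
    (tier that answered, prediction). All three callables are stand-ins
    for real components.
    """
    # Tier 0: a cheap filter on the device or gateway drops most frames
    # (for example, frames with no motion) before any model runs.
    if not is_interesting(frame):
        return None

    # Tier 1: the compact on-device model answers when it is confident.
    label, confidence = compact_model(frame)
    if confidence >= handoff_below:
        return ("device", (label, confidence))

    # Tier 2: uncertain frames go to the heavier model on the edge server.
    return ("edge_server", heavy_model(frame))
```

In this sketch the cloud does not appear in the request path at all, which matches its role in the text: it trains, registers, and orchestrates the models that the two local tiers run.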

How this architecture could change by vertical

The core stack is stable; the knobs change. Here are a few examples by industry:

In retail, devices may include cameras, scales, and shelf sensors; the gateway often integrates with POS and store Wi‑Fi; the edge server might run multi‑camera vision, SKU recognition, and a local product‑catalog RAG. Commercial impact can show up as less shrinkage, faster checkout, or better labor allocation, and the same footprint can later add queue monitoring or energy optimization.

In healthcare, devices can include imaging probes, bedside monitors, and cameras. Gateways usually need to meet medical‑device‑grade management and audit requirements. Edge compute lives on‑device or in a small edge server on a cart or in a shared IT room. Potential benefits we have seen include faster triage, fewer repeat scans, lower infection rates, and more consistent quality under staffing constraints.

In manufacturing, PLCs/HMIs, vision cameras, and supervisory control systems such as SCADA are common. Gateways are typically segmented and read‑only by default. Edge compute can handle defect detection and anomaly prediction; closed‑loop control is allowed only under safety interlocks. The value tends to show up as lower scrap, higher equipment uptime, increased productivity, and just-in-time inventory and procurement management.

In buildings and campuses, occupancy sensors, badge readers, and HVAC meters combine with light vision workloads; local RAG over maintenance logs may help technicians work faster. Savings frequently come from energy optimization and better space use, and this domain often provides an approachable path to multi‑app expansion on the same hardware.

In logistics and mobility, the edge server may run in the depot or on vehicles/robots with periodic offload to a micro data center for learning and route planning. The payoffs can include higher pick accuracy, fewer misloads, and safer operations.

Across all of these, the seven layers and three planes remain a consistent starting point. Use cases, latency targets, privacy policies, and cost thresholds vary by industry and by site, but the economic value tends to be grouped around higher revenue, lower costs, and more effective risk management.

Why we think this architecture is poised to win

Intelligence at the edge puts decisions at the moment of value, then carries that capability across messy sites where bandwidth and compute are tight. The layered stack makes ownership clear. The three operating planes make updates easier and safer. The deployment patterns let enterprises choose the trade-offs between speed, cost, and control that suit their industry.

Once the footprint is in place, additional applications are mostly software. You reuse the same sensors, servers, and data layer, so expansion revenue can rise while time to the second use case falls. Total cost of ownership improves as edge filtering trims bandwidth and cloud bills, and centralized knowledge sharing and governance spread gains across the fleet.

Privacy and compliance get simpler when sensitive data stays on site. Data gravity works in companies' favor as well: when they ship summaries, features, and insights instead of large raw data streams, models keep improving without overwhelming storage and compute capacity.

When we meet founders who are modular where it matters and opinionated where it counts, we tend to see platforms that learn faster, fail less often and more safely, and scale more cleanly. That is the kind of edge intelligence we are excited about at Cota, and one we believe can power a meaningful share of the next wave of industry‑scale AI.